Crafting AI Agents.A Guide to Building and Validating with an MCP Server - PART I

Crafting AI Agents: A Guide to Building and Validating with an MCP Server

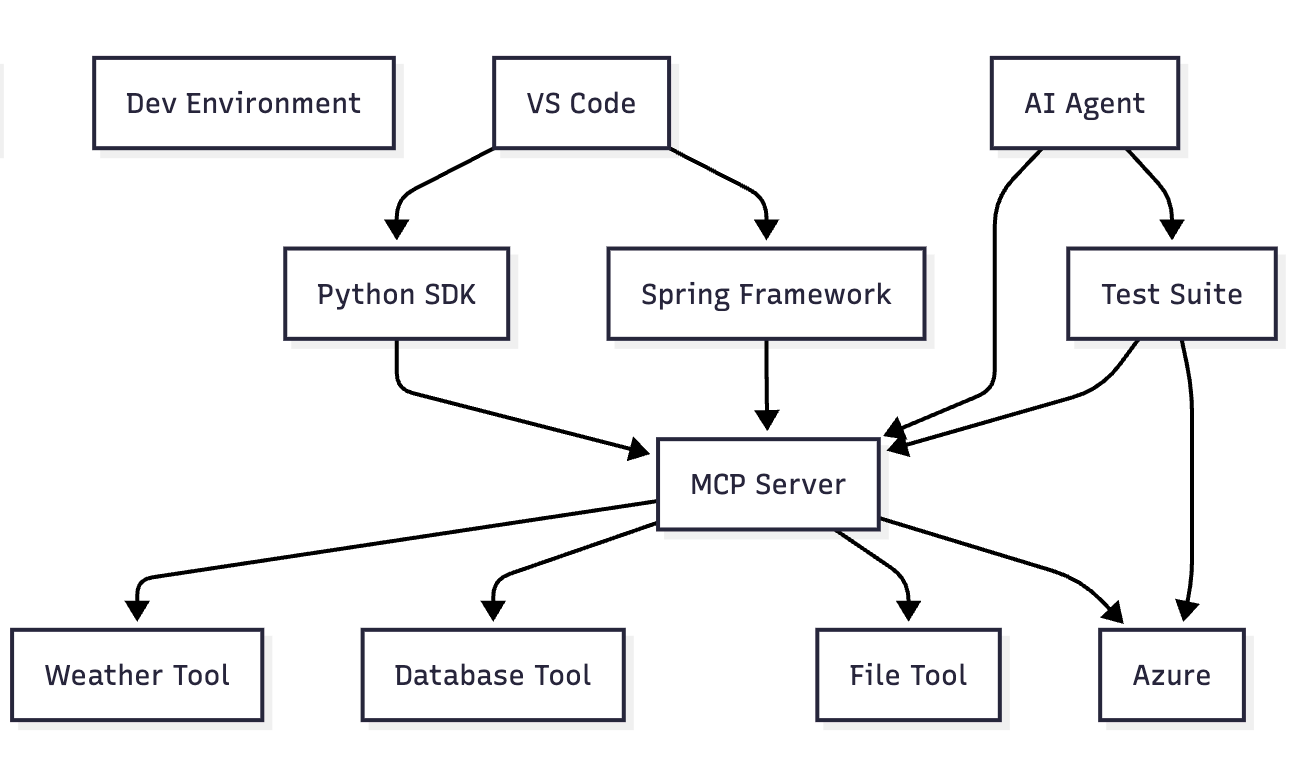

An MCP (Model Context Protocol) server is a key component in building AI agents. It’s a standardized way for an AI agent to access external tools, services, and data sources, allowing the agent to perform actions beyond its core programming. Think of it as a smart adapter that translates an AI agent’s request into commands a specific tool understands, like a GitHub API or a local file system.

Getting Started with an MCP Server and AI Agent

To begin, you’ll need to set up a development environment. A common approach is using a code editor like VS Code and a language like Python or TypeScript. You can also use Java and the Spring Framework to build an MCP server.

Understand the Architecture: The basic setup involves an MCP Client, which is the AI agent itself, and an MCP Server. The client sends requests to the server, and the server provides the tools and context the agent needs to complete a task.

Choose a Framework: Start with an existing framework or SDK for building an MCP server. For instance, the Python MCP SDK is a popular choice for developers using Python. For Java developers, the Spring Framework is an excellent choice. Spring’s robust and flexible nature makes it ideal for building RESTful APIs that can act as an MCP server.

Define Your Tools: The core of your MCP server is the set of tools it exposes. A tool is a specific action the AI agent can take, such as “get current weather,” “search a database,” or “create a file.” Each tool has a defined name, description, and input parameters.

Set Up the Server: With your tools defined, you can then instantiate and run the MCP server. This can be a local server for testing or a remote one hosted on a Tanzu Platform or cloud AI services for broader use. With Spring, you’ll use annotations like @RestController and @GetMapping to expose your tools as web services.

Building, Validating, Testing, and Iterating

Building a robust AI agent is an iterative process, not a linear one. It involves continuous cycles of development, testing, and refinement.

Building and Iterating

Start Small: Begin with a minimal viable product (MVP). Create a simple agent with one or two tools to prove the concept. For example, a weather agent with a single get_forecast tool.

Add Complexity Incrementally: Once your MVP works, add more features and tools in small, manageable sprints. This agile approach allows you to expand the agent’s capabilities while keeping the project manageable.

Use Feedback Loops: An AI agent’s development should include a constant feedback mechanism. This can be user feedback, but it also includes the agent’s own ability to reflect and learn from its actions.

Learn from Mistakes: When an agent fails, it should store that data and use it to improve its performance in future attempts. This is a core part of the “learning agent” model.

Validation and Testing

Develop Test Cases: Create a set of test cases that cover all the possible paths and functions of your agent. These should include not only successful scenarios but also edge cases and potential failure points.

Simulate Interactions: Use synthetic data or even another AI model to act as a simulated user. This stress-tests the agent’s ability to handle complex, multi-turn conversations and unpredictable inputs.

Evaluate Agent Behavior: Go beyond just checking the final output. Analyze the agent’s internal thought process, its path to a solution, and its choice of tools. Look for signs of “path errors,” where the agent gets stuck in a loop or takes unnecessary steps.

Use an LLM-as-a-Judge: A popular method for evaluating agent performance is to use a separate, powerful language model to act as a judge. It can score the agent’s responses based on criteria like accuracy, relevance, and helpfulness.

Continuous Integration/Continuous Deployment (CI/CD): Integrate your testing pipeline into your CI/CD workflow. This ensures that every time you make a change, the agent is automatically tested against your defined criteria before it’s released into production.